Hi readers!

Bernard Marr is one of the world’s most successful social media influencers at the intersection of business and technology. He once shared some quotes about artificial intelligence that are funny but also thought provoking:

“The sad thing about artificial intelligence is that it lacks artifice and therefore intelligence.” “Forget artificial intelligence: in the brave new world of big data, it’s artificial idiocy we should be looking out for.” “Before we work on artificial intelligence, why don’t we do something about natural stupidity?”

I have blogged on this topic at least four times and am currently vlogging on it too, but what compelled me to blog on it again today is a statement from “the Godfather of Artificial Intelligence,” who resigned from Google, saying that

“He regrets his life’s work because it can be hard to stop bad actors from using it for bad things”.

Prarthana Prakash of Fortune reported this on May 1st, 2023, under the headline:

“Geoff Hinton quits Google, says he regrets his life’s work”

The report further said that Geoffrey Hinton, the Godfather of Artificial Intelligence (AI), pioneered some of the key concepts used today to power AI systems like ChatGPT. ChatGPT is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed response, and it is used by millions of people today. GPT stands for Generative Pre-trained Transformer, a type of deep learning model built on the transformer architecture that Google researchers introduced in 2017. But the 75-year-old Hinton says he regrets the work to which he has devoted his life because of how AI could be misused.

In an interview published in the New York Times on May 1st, 2023, the Godfather of AI said, “It is hard to see how you can prevent the bad actors from using it for bad things.” He added, “I console myself with the normal excuse: If I hadn’t done it, somebody else would have.”

The question is: why is Hinton often referred to as “the Godfather” of AI?

The reason is that he spent years in academia before joining Google in 2013, when Professor Geoffrey Hinton and two of his graduate students at the University of Toronto (U of T) sold their startup company, DNNresearch, to Google Inc. for $44 million. Hinton told the Times that the tech giant had been a “proper steward” (supervisor) of how AI technology should be deployed, and that Google had acted responsibly on its part.

But 10 years later, in May 2023, he left the company so that he could speak freely and openly about the “dangers” of artificial intelligence, namely:

“How easy access to AI text and image generation tools could be used to make fake or fraudulent content, and how the average person would ‘not be able to know what is true or not anymore.’”

Dear readers! Do you remember my earlier blogs in which I raised identical apprehensions? If not, please read my blog at the following link again:

https://amagricon.com/2022/06/is Artificial Intelligence a threat to humanity? Data and AI part 4.

In this blog, I mentioned something similar to Geoff Hinton’s apprehensions. For example, I wrote:

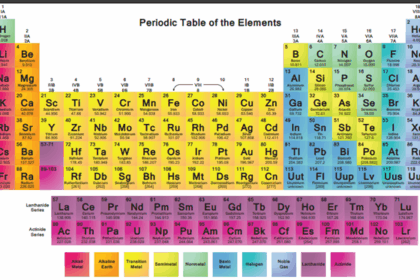

Now, when we talk about “threats from AI,” which AI are we talking about? There are three types:

Artificial narrow intelligence (ANI), the most common form of AI, is designed to solve one single problem, like recommending a product to e-commerce users or predicting the weather. It can come close to human functioning in very specific contexts and is the type most easily found in the market,

Artificial general intelligence (AGI) would possess the intellectual functions of humans in various domains, including language and image processing, computational functioning, and reasoning. AGI would comprise thousands of ANI systems working in tandem and communicating with each other to mimic human reasoning, and

Artificial super intelligence (ASI), a progression of AGI that would surpass all human capabilities, such as making rational decisions, and even things like making better art and building emotional relationships.

Once the objective of AGI is achieved, AI systems could quickly improve their capabilities and move into domains currently beyond our comprehension, because the gap between AGI and ASI is relatively narrow (a matter of nanoseconds) given how fast AI learns. Such perceptions, that AI is being endowed with human-like intelligence, have led some people to consider AI a danger to humanity if it is allowed to progress “unabated,” and the most commonly felt threat is mass unemployment.

So, first a field was invented that can progress rapidly without the involvement of human beings, and now apprehensions are mounting about how to take care of unemployed humans and about the danger to humanity.

Which perception is worth considering, the first or the last? No one can decide, as confusion is all over. Where does the problem lie?

Is it in human vision, which is different from revelation?

In foresight, which is different from intuition? And/or

In decision making, which is based on prevision?

No one realized this before we reached where we are now. But since AI is everything and everywhere in the business arena, boosting new product development, its endless propagation warrants conscious deployment, especially as Machine Learning (ML) assumes a larger role in how work is done and forces people to ask whether AI is a threat to human existence.

I quoted this example:

The breakdown of Facebook and WhatsApp (due to a “faulty configuration change”) for just a few hours, and the consequent monetary losses, shocked everyone, as the world at large was not prepared for it. Mark Zuckerberg lost $9 billion in net worth due to Facebook’s rare outage and the stock fall on October 4th, 2021 (Bloomberg and Business Standard). Besides, 3.5 billion users around the world were also affected through disrupted travel plans, Zoom, WhatsApp and Skype meetings and calls, Instagram, Messenger and whatnot, leaving behind the question of all questions:

Is the “digitalization” of everything the answer to every problem? Or is it a fight between competing technologies? From going offline to coming back online, the 6-hour gap left a message in between. Did anyone read it?

Geoffrey Hinton’s concerns surround improper uses of AI that have already become a reality. For example, fake images of Pope Francis in a white puffer jacket made headlines on social media some time ago, and the Republican National Committee published deepfake visuals showing China invading Taiwan and banks failing if President Joe Biden is reelected.

Since Google and Microsoft are upgrading their Artificial Intelligence products, they are urged to pay attention to the calls for slowing the pace of new developments and regulating a space that has expanded rapidly in recent months. In March 2023, top names in the tech industry, such as Apple cofounder Steve Wozniak and computer scientist Yoshua Bengio, signed an open letter calling for a pause on the development of advanced AI systems. Hinton also believes that companies should think before upgrading AI technology further.

Hinton is also worried about how AI could change the job market by rendering nontechnical jobs irrelevant and taking away drudge work (something I also mentioned in my blog).

Google commented on Geoffrey’s interview, saying, “Geoffrey has made foundational breakthroughs in AI, and we appreciate his decade of contributions at Google.” The company’s chief scientist told Fortune, “As one of the first companies to publish Artificial Intelligence principles, we remain committed to a responsible approach to the field. We’re continuously learning to understand emerging risks while also innovating boldly.”

Artificial Intelligence and Hinton’s contribution

Hinton began his career as a graduate student at the University of Edinburgh in 1972. That’s where he first started his work on neural networks: mathematical models that mimic the workings of the human brain and are capable of analyzing vast amounts of data. His neural network research was the breakthrough concept behind the company he built with two of his students, called DNNresearch, which Google ultimately bought in 2013. Hinton won the 2018 Turing Award, often described as the Nobel Prize of computing, together with two colleagues for their neural network research, which has been key to the creation of technologies including OpenAI’s ChatGPT and Google’s Bard chatbot.

As one of the key thinkers in AI, Hinton sees the current moment as “pivotal” and ripe with opportunity. In an interview with CBS in March, Hinton said he believes AI innovations are outpacing our ability to control them, and that this is a cause for concern. He further told CBS:

“It’s very tricky things. You don’t want some big for-profit companies to decide what is true.” He added, “Until recently, I thought it was going to be like 20 to 50 years before we have general purpose AI, but now I think it may be 20 years or less.”

“Nobody phrases it this way, but I think that artificial intelligence is almost a humanities discipline. It’s really an attempt to understand human intelligence and human cognition,” said Sebastian Thrun, a German-American entrepreneur, educator, and computer scientist.

That’s all for now dear readers.

See you next week. Till then, take care. Bye.